Working from home has become a standard operating practice for the semiconductor design industry.

Working from home is quickly becoming the norm. A recent survey by FlexJobs found that 70% of people would prefer a remote working environment over a traditional one, and the practice has become even more popular in the semiconductor design industry. There is no single reason why working from home has become so prevalent, but several factors contribute to the trend, and it is not surprising to see companies like Oracle and Intel putting their money on the table to support this way of working. To meet evolving design data management requirements, companies are now implementing design-aware data management for hybrid cloud. This article explores the pros and cons of leveraging hybrid cloud infrastructure for semiconductor companies and explains why design-aware data management for hybrid cloud offers the ideal solution.

Silicon chip shortage affecting on-premises data centers

The Covid-19 pandemic has forced us to adapt in many ways, with new challenges around every corner that need to be tackled immediately. Many of these stem from disruptions in supply chains for products such as food and pantry items, toilet paper, and computer desks. The most recent is the silicon chip shortage, which is why you can’t find a gaming console or purchase a car (loaded with electronics).

These days, it seems like everyone is talking about silicon chip shortages. But did you know that the shortage also deeply affects IT teams? The engineering workforce shifted almost overnight to working from home. Consequently, IT teams needed to keep up with a sudden and sustained rise in demand for compute servers, shared storage, and networking switches. In the early part of the pandemic, IT teams came up with creative ideas, such as converting conference rooms into makeshift server rooms to build additional capacity on-premises. But now they have hit a crisis point: the silicon chip shortage makes it difficult to obtain the server infrastructure needed to scale existing data centers. Scaling existing data centers is therefore less of an option for companies that want their team members productive again.

Cloud computing for scale

The only option available at the moment is to turn to cloud service providers, who have been building capacity for many years. In theory, with a workload powered by cloud infrastructure, the team can use the elasticity of the cloud to spin up thousands of compute nodes within a matter of minutes. But as with most things, this is a lot easier said than done.

Traditionally, Electronic Design Automation (EDA) and High-Performance Computing (HPC) workloads are optimized for local on-premises infrastructure, with significant investment and tuning to make them work successfully. Lifting and shifting them to the cloud is a challenge on both technical and economic fronts. That is why, at a high level, a hybrid approach that marries the on-premises data centers with the cloud is the lower-risk option.

Data management challenges with cloud computing

While there are many challenges to tackle with the hybrid approach (e.g., security, latency, and cost), the hybrid model has a usability issue specific to EDA flows. Typically, EDA flows depend on many files, including but not limited to Process Design Kits (PDKs), models, and Intellectual Property (IP) files, that change frequently and asynchronously. When kicking off a simulation or verification job, the onus of manually ensuring that the correct versions of these files are in place lies with the end user. If the user makes an error, the penalty in lost time and resources is high.
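
The manual check described above can be sketched in a few lines of Python. This is only an illustration of the idea, not part of any EDA tool: the function name `find_version_mismatches`, the file paths, and the version labels are all hypothetical.

```python
from typing import Dict, List


def find_version_mismatches(required: Dict[str, str],
                            workspace: Dict[str, str]) -> List[str]:
    """Compare the versions a job needs against what the workspace holds.

    Both arguments map a file path to a version label. The returned list
    describes every missing or mismatched file; an empty list means the
    workspace is safe to run against. (Hypothetical helper for illustration.)
    """
    problems = []
    for path, wanted in sorted(required.items()):
        actual = workspace.get(path)
        if actual is None:
            problems.append(f"{path}: missing (need {wanted})")
        elif actual != wanted:
            problems.append(f"{path}: have {actual}, need {wanted}")
    return problems


if __name__ == "__main__":
    # Illustrative data only; real flows track far more files.
    required = {"pdk/models.lib": "v7", "ip/serdes.gds": "v3"}
    workspace = {"pdk/models.lib": "v6"}
    for problem in find_version_mismatches(required, workspace):
        print(problem)
```

Running a check like this before launching a job catches a stale PDK or a missing IP file before any simulator license or compute time is spent.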

At the outset, the solution to this high-risk problem seems easy. Let’s make all the files available from on-premises to on-cloud with a virtual file system. The cloud can fetch files as the workload needs them. It’s as simple as that! This solution is an infrastructure-based approach involving IT teams configuring on-premises hardware and software to solve a business problem.

But this is neither a novel solution nor one that works well in practice on any sizable production system. The file system is a core component of any computer operating system, so the complexity and risk of introducing a new virtual file system are significant. If something goes wrong with it, work stops for the entire team. Furthermore, these types of file systems give applications the illusion that files are in their rightful place when they aren’t physically present. The simulation and verification workload assumes the files are present and operates accordingly. When it has to read the contents of a file, the virtual file system copies the bytes from on-premises via a potentially slow and unstable link. In a workload that depends on thousands of files, an expensive simulator, for example, could sit idle for a significant percentage of its runtime waiting for files to arrive, and engineers’ time is wasted along with it. Way back in 1992, ClearCase used this type of approach for storing files: “The VOBs (Versioned Object Bases) are down” was a common reason for long lunches at many ClearCase sites.
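
To get a feel for how much simulator time on-demand fetching can cost, here is a rough back-of-the-envelope model in Python. It assumes files are fetched one at a time with no overlap between fetching and computing, which is a deliberate simplification. The function name `idle_fraction` and every number in the example are illustrative assumptions, not measurements.

```python
def idle_fraction(num_files: int, avg_file_mb: float, link_mbps: float,
                  latency_s: float, compute_s: float) -> float:
    """Estimate the share of wall-clock time a job spends waiting on files.

    Assumes serial, on-demand fetches: each file costs one round-trip
    latency plus its transfer time, and none of it overlaps with compute.
    Sizes are in megabytes (10**6 bytes), link speed in megabits per second.
    """
    transfer_s_per_file = avg_file_mb * 8 / link_mbps
    fetch_s = num_files * (latency_s + transfer_s_per_file)
    return fetch_s / (fetch_s + compute_s)


if __name__ == "__main__":
    # Assumed workload: 5,000 files of 2 MB each over a 100 Mbps link with
    # 50 ms latency per fetch, feeding a job with one hour of pure compute.
    share = idle_fraction(5000, 2.0, 100.0, 0.05, 3600.0)
    print(f"Time spent waiting on files: {share:.0%}")
```

Under these assumed numbers the job spends about 23% of its wall-clock time waiting on files. Real virtual file systems may prefetch or cache, so treat this as an upper-bound style estimate for the worst case the paragraph describes.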

An intelligent design-aware approach to data management on cloud

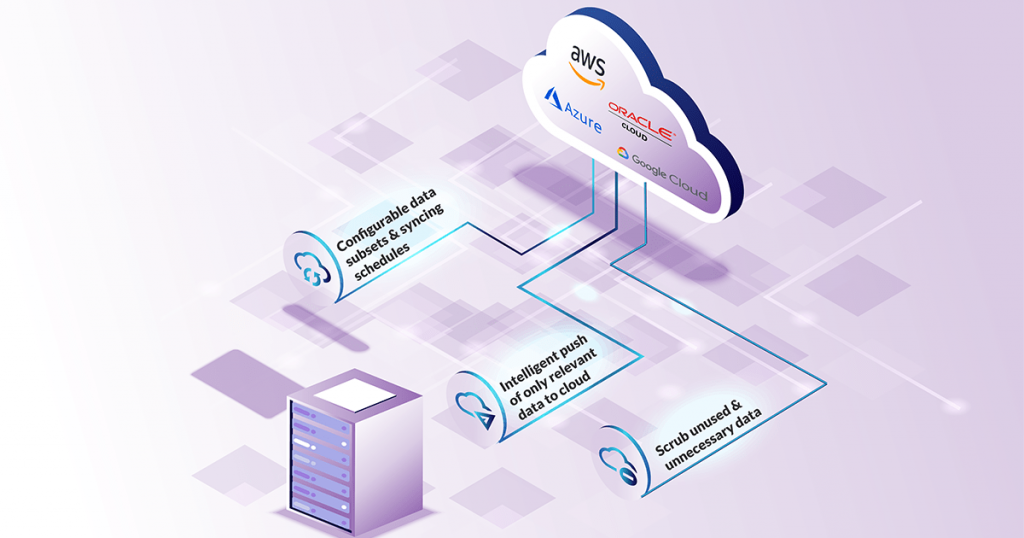

An alternative approach is to achieve the same results with a workflow-based solution that focuses on the operational needs of the design teams. It works on the simple principle of synchronizing the design data intelligently instead of taking the blanket approach of a virtual file system. Simply put, a design-aware data management system surgically collects and synchronizes the correct versions of the design objects required for the workload without interaction from users or admins. The workload can then kick off the simulator with the assurance that the files the simulator needs are already present on the cloud.
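
As a rough illustration of what “surgical” synchronization means, the Python sketch below walks the dependencies of a top-level design object and lists only the objects whose required version is not already on the cloud. It is a toy model under stated assumptions, not how any particular product is implemented: `plan_sync`, the dependency map, and the version labels are all hypothetical, and every object reachable from `top` is assumed to have an entry in `versions`.

```python
from typing import Dict, List, Set, Tuple


def plan_sync(top: str,
              deps: Dict[str, List[str]],
              versions: Dict[str, str],
              on_cloud: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return the (object, version) pairs that must be copied to the cloud.

    Only objects reachable from `top` through `deps` are considered, and
    anything already on the cloud at the required version is skipped.
    """
    needed: Set[str] = set()
    stack = [top]
    while stack:  # iterative depth-first walk of the dependency graph
        obj = stack.pop()
        if obj in needed:
            continue
        needed.add(obj)
        stack.extend(deps.get(obj, []))
    return sorted((obj, versions[obj]) for obj in needed
                  if on_cloud.get(obj) != versions[obj])


if __name__ == "__main__":
    deps = {"chip_top": ["cpu", "serdes"], "cpu": ["pdk"], "serdes": ["pdk"]}
    versions = {"chip_top": "v12", "cpu": "v8", "serdes": "v3",
                "pdk": "v7", "unused_block": "v1"}
    on_cloud = {"pdk": "v7", "cpu": "v7"}
    print(plan_sync("chip_top", deps, versions, on_cloud))
```

In this example `unused_block` is never transferred because the workload does not depend on it, and `pdk` is skipped because the cloud already holds the right version. That is the difference from a blanket volume sync.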

Cliosoft’s SOS Design Management System has been using this approach to syncing data for 20 years. In the early 2000s, semiconductor companies adopted a follow-the-sun development strategy, hiring talented engineers around the world to address market needs. The globalization of teams brought new challenges in design and data management. Indiscriminately syncing filer volumes was neither practical, given the state of the infrastructure at some of these sites, nor advisable, due to security and contractual compliance concerns. The only viable option at the time was to sync the data surgically. In response, CAD/IT teams built homegrown solutions using basic UNIX tools like rsync, SCP, and crontab. However, these solutions were hard to maintain and to scale with the organization’s growth.

Using the cache functionality with Cliosoft design management system

Cliosoft SOS connects a primary data center (also known as a “site”) to multiple remote sites, each with a cache server. Users at a remote site connect to the data repository via the cache server at their site. A cache server in the SOS design management platform keeps track of the users at its site and the data each of them uses. Because the cache server “knows” the usage patterns of its users, it can sync only the data required at the site, nothing more and nothing less. Thus, the caching system limits the data that must be synced between the primary and the remote site. Design teams accumulate stale data at each remote site as the design project progresses. Once set up, the cache can scrub this stale data with no human intervention. More than 350 semiconductor companies have been using this technology for 20 years.
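
The idea of a cache that tracks usage and scrubs stale data can be illustrated with a small Python sketch. This is a conceptual toy, not the SOS cache server or its API: the class `SiteCache`, its methods, and the 30-day idle threshold in the example are all assumptions made for illustration.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (design object, version)


class SiteCache:
    """Toy model of a per-site cache that remembers when data was last used."""

    def __init__(self) -> None:
        self._last_used: Dict[Key, datetime] = {}

    def record_use(self, obj: str, version: str, when: datetime) -> None:
        """Note that a user at this site touched this version of an object."""
        self._last_used[(obj, version)] = when

    def scrub(self, now: datetime, max_idle: timedelta) -> List[Key]:
        """Drop entries idle for longer than `max_idle`; return what was dropped."""
        stale = sorted(key for key, used in self._last_used.items()
                       if now - used > max_idle)
        for key in stale:
            del self._last_used[key]
        return stale

    def contents(self) -> List[Key]:
        """List what the cache currently holds, in sorted order."""
        return sorted(self._last_used)


if __name__ == "__main__":
    cache = SiteCache()
    cache.record_use("cpu", "v7", datetime(2022, 1, 1))
    cache.record_use("cpu", "v8", datetime(2022, 3, 1))
    print(cache.scrub(datetime(2022, 3, 10), timedelta(days=30)))
    print(cache.contents())
```

Here the older `cpu` version is scrubbed because nobody at the site has touched it within the assumed idle window, while the version still in use stays in place.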

Design teams’ operational practices vary depending on their product development methodology and culture. For example, some design teams using the follow-the-sun methodology need the latest data available at the remote sites at 8:00 AM local time. In contrast, other design teams adopt a lazier approach and wait for users to request data in order to conserve bandwidth. In some design methodologies, the design team may need immediate access to data across all sites. In others, the team at a particular site may need only part of the project’s data. Cliosoft SOS can realize all of these design data management configurations.

Semiconductor design companies looking to the cloud to scale their workloads can treat the organization’s Virtual Private Cloud as just another site in the Cliosoft system. With a few tweaks to workflow scripts, the cloud site is online, and engineers can fire off EDA workloads on thousands of cloud instances. This setup can improve the design team’s productivity and ability to scale while saving costs.

A surgical design-aware approach is needed

The prime advantages of this surgical design-aware approach over the naive volume sync approach are:

- More efficient and responsive synchronization system

- Eliminate unnecessary data egress with surgical syncs

- Fewer files and smaller sizes (GBs instead of TBs)

- Optimize cloud storage utilization automatically, reducing human interaction and error

This way, the expensive simulator licenses and compute resources are invoked only on an as-needed basis, maximizing the ROI of using the cloud. Scrubbing unused and unnecessary data from the cloud is also important and urgent for compliance and cost reasons. All of this happens with no intervention from IT administrators or users.

As the pandemic dies down, the legacy of working from home lives on as design teams realize the flexibility and productivity gains of this new model. The use of cloud computing’s elasticity and scale is, more likely than not, here to stay.

Design-aware data syncing to the cloud is essential for setting up an efficient and effective design methodology and environment to maximize the productivity of design teams.